The Architecture Behind ETL That Moves the Business Forward

For data-driven enterprises, the ability to process and analyze terabytes of data efficiently is a strategic differentiator. Extract, Transform, Load (ETL) pipelines serve as the backbone of modern analytics platforms, but traditional approaches often fail to scale when confronted with the complexity and velocity of enterprise workloads.

This article outlines the principles, architectural patterns, and best practices for designing ETL pipelines that deliver reliability, scalability, and performance at enterprise scale.

Core Principles of Enterprise-Scale ETL

1. Separation of Concerns

- Decouple data ingestion from data processing to improve fault tolerance and scalability.

- Use event-driven pipelines (e.g., Kafka, Kinesis, Pub/Sub) rather than monolithic scheduled jobs.
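To make the decoupling concrete, here is a minimal sketch in which an in-memory `queue.Queue` stands in for a durable event log such as a Kafka topic. The record fields (`order_id`, `amount`) and the function names are hypothetical, chosen only for illustration; a real deployment would use a broker client instead of a local queue.

```python
import queue
import threading

SENTINEL = object()  # marks end of stream in this toy example


def ingest(source_records, buffer):
    """Ingestion stage: only publishes raw events; it knows nothing about transforms."""
    for record in source_records:
        buffer.put(record)
    buffer.put(SENTINEL)


def process(buffer, sink):
    """Processing stage: consumes events at its own pace and isolates bad records."""
    while True:
        record = buffer.get()
        if record is SENTINEL:
            break
        try:
            sink.append({"order_id": record["order_id"],
                         "amount": float(record["amount"])})
        except (KeyError, ValueError):
            # A malformed event is skipped; ingestion and the rest of the stream continue.
            continue


def run_pipeline(source_records):
    """Wire the two stages together through the buffer and return the processed rows."""
    buffer = queue.Queue()
    sink = []
    consumer = threading.Thread(target=process, args=(buffer, sink))
    consumer.start()
    ingest(source_records, buffer)
    consumer.join()
    return sink
```

Because the two stages share only the buffer, either side can be scaled, restarted, or replaced without changing the other, which is the property the event-driven approach is meant to provide.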

2. Durable, Immutable Storage

- Store raw data in cloud-native data lakes (Amazon S3, Azure Data Lake, Google Cloud Storage).

- Adopt columnar file formats such as Parquet for efficient query performance.

- Layer storage with transactional frameworks like Delta Lake, Apache Iceberg, or Hudi.
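One way to picture immutable raw storage is a write-once landing function. The sketch below uses the local filesystem as a stand-in for an object store; the `land_raw` name, the `ingest_date=` partition folder, and the content-hash file naming are illustrative assumptions, not a prescribed layout.

```python
import hashlib
from pathlib import Path


def land_raw(root, source, ingest_date, payload):
    """Write one raw payload (bytes) to a write-once location and return its path."""
    digest = hashlib.sha256(payload).hexdigest()
    target = Path(root) / source / f"ingest_date={ingest_date}" / f"{digest}.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    try:
        # Mode "xb" fails if the file already exists, so landed data is never overwritten.
        with open(target, "xb") as handle:
            handle.write(payload)
    except FileExistsError:
        # The name is the content hash, so an existing file holds the same bytes:
        # a replayed delivery becomes a no-op.
        pass
    return target
```

Naming files by content hash makes re-delivery idempotent, and keeping the raw bytes untouched means downstream layers can always be rebuilt from them. Conversion to a columnar format such as Parquet would happen in a later step.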

3. Distributed and Parallel Processing

- Employ distributed frameworks such as Apache Spark, Flink, or Beam for large-scale transformations.

- Enable autoscaling on platforms like Databricks, EMR, or Dataflow to manage variable workloads.

- Compact small files into larger ones and partition data on commonly filtered columns (for example, date) so that queries can skip irrelevant files.
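Small-file handling usually comes down to periodically rewriting many small files as fewer large ones. The sketch below only plans such a compaction: it groups file names into batches with a simple greedy heuristic. The function name, the input shape, and the target size are assumptions for illustration; table formats and engines ship their own compaction commands, which should be preferred in practice.

```python
def plan_compaction(file_sizes, target_bytes):
    """Group small files into batches whose total size stays at or below target_bytes.

    file_sizes maps a file name to its size in bytes. Files already at or above
    the target are left alone, and batches with a single file are dropped because
    rewriting them would gain nothing.
    """
    batches = []
    current, current_size = [], 0
    # Visit the largest files first so each batch fills up quickly.
    for name, size in sorted(file_sizes.items(), key=lambda item: item[1], reverse=True):
        if size >= target_bytes:
            continue
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return [batch for batch in batches if len(batch) > 1]
```

Each returned batch would then be read and rewritten as a single file by the processing engine.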

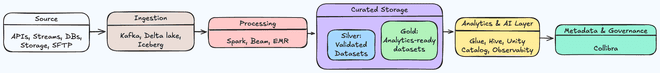

4. Layered Data Architecture

- Bronze Layer: Raw, immutable ingestion.

- Silver Layer: Standardized and validated datasets.

- Gold Layer: Business-ready datasets optimized for analytics and machine learning.

This structured approach separates concerns, ensures data quality, and provides a clear path from raw input to actionable insights.
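The three layers can be sketched as two small functions over plain Python rows. The field names (`country`, `amount`) and the validation rules are hypothetical; in a real pipeline each layer would be a table written by a distributed job.

```python
from collections import defaultdict


def to_silver(bronze_rows):
    """Standardize types and drop rows that fail validation; bronze is left untouched."""
    silver = []
    for row in bronze_rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # missing or non-numeric amount
        country = str(row.get("country", "")).strip().upper()
        if not country or amount < 0:
            continue  # blank country or negative amount
        silver.append({"country": country, "amount": amount})
    return silver


def to_gold(silver_rows):
    """Aggregate validated rows into a business-ready revenue-per-country table."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)


bronze = [
    {"country": " us ", "amount": "10.5"},
    {"country": "US", "amount": 4.5},
    {"country": "de", "amount": "oops"},  # rejected in silver
    {"country": "DE", "amount": 2},
]
gold = to_gold(to_silver(bronze))
```

Because the bronze rows are never modified, the silver and gold outputs can be recomputed at any time if the validation or aggregation rules change.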

5. Metadata and Governance

- Use a schema registry to manage schema evolution and catch incompatible changes before they reach consumers.

- Maintain a centralized catalog (AWS Glue, Hive Metastore, Unity Catalog).

- Ensure data lineage, observability, and governance are first-class citizens of the design.
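As an illustration of what a schema check for data evolution might do, the sketch below compares two versions of a schema and reports changes that could break existing consumers. The schema representation (a dict of field name to `type` and `required`) and the three rules are simplifying assumptions; actual schema registries define their own compatibility modes and rules.

```python
def compatibility_problems(old_schema, new_schema):
    """Return a list of changes in new_schema that may break readers of old_schema."""
    problems = []
    for name, old_field in old_schema.items():
        new_field = new_schema.get(name)
        if new_field is None:
            problems.append(f"field removed: {name}")
        elif new_field["type"] != old_field["type"]:
            problems.append(
                f"type changed: {name} {old_field['type']} -> {new_field['type']}"
            )
    for name, new_field in new_schema.items():
        # A new field is only safe if existing producers may omit it.
        if name not in old_schema and new_field.get("required", False):
            problems.append(f"new required field: {name}")
    return problems


v1 = {
    "id": {"type": "int", "required": True},
    "email": {"type": "string", "required": False},
}
v2_ok = dict(v1, signup_ts={"type": "timestamp", "required": False})
v2_bad = {
    "id": {"type": "string", "required": True},
    "tier": {"type": "string", "required": True},
}
```

Here `compatibility_problems(v1, v2_ok)` returns an empty list, while `v2_bad` is flagged for a type change, a removed field, and a new required field. A check like this could run in CI before a new schema version is registered.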

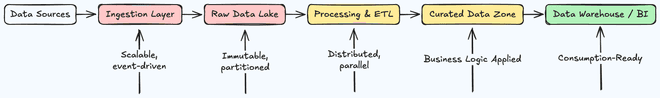

High-Level ETL Pipeline Architecture

The architecture below traces the flow of data from diverse sources through ingestion, storage, processing, and curation before it reaches analytics platforms.

High-level architecture for ETL

Cloud-native architecture for ETL

At enterprise scale, ETL pipelines transcend tactical engineering: they become a core enabler of business intelligence, advanced analytics, and AI initiatives. By focusing on decoupled architectures, distributed processing, layered data models, and robust governance, organizations can build ETL systems that are not only technically resilient but also strategically aligned with enterprise growth.

Author:

Rahul Majumdar